Digging Into Data: The Gems at the Back of the Bookstore

Tracy Turner

Associate Dean for Student Learning Outcomes

Graphs by Bradley Yost, Manager of Institutional Research

Southwestern Law School

For a student-focused school like my institution, Southwestern Law School, LSSSE survey results can sometimes be treated as an affirmation that we are doing a good job when our averages in desirable categories exceed the LSSSE average and the averages at our peer schools. With the addition of an in-house institutional research expert, however, we have been able to start digging beneath the surface of the results, not only by disaggregating data by demographics but also by cross-referencing answers to different questions within the survey. It feels like the difference between window shopping and burying our heads in the boxes stashed in the back of the bookstore where the real gems are hiding.

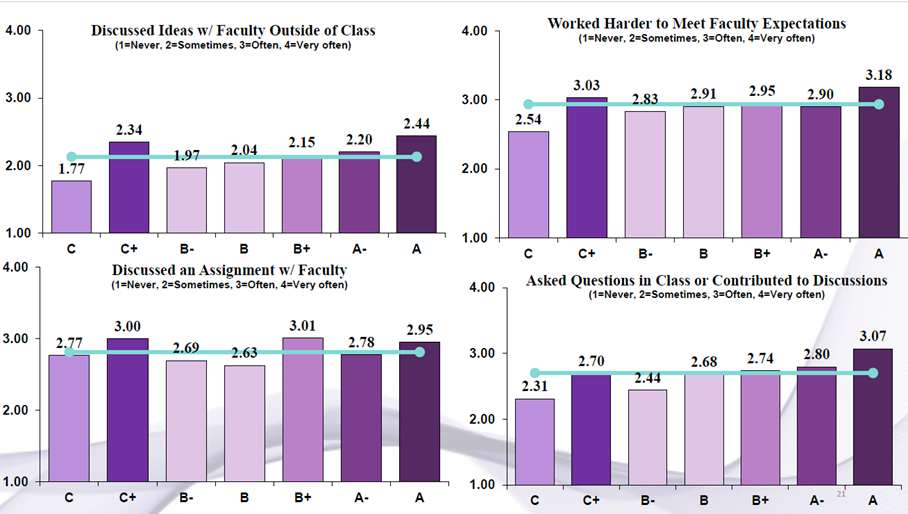

Sometimes the results merely confirmed suspicions. For example, student assessment of their relationships with faculty correlated with their experience in receiving prompt feedback on assignments and with their perception of support for their success. Other times, however, the results were surprising and raised interesting questions about how to support our students.

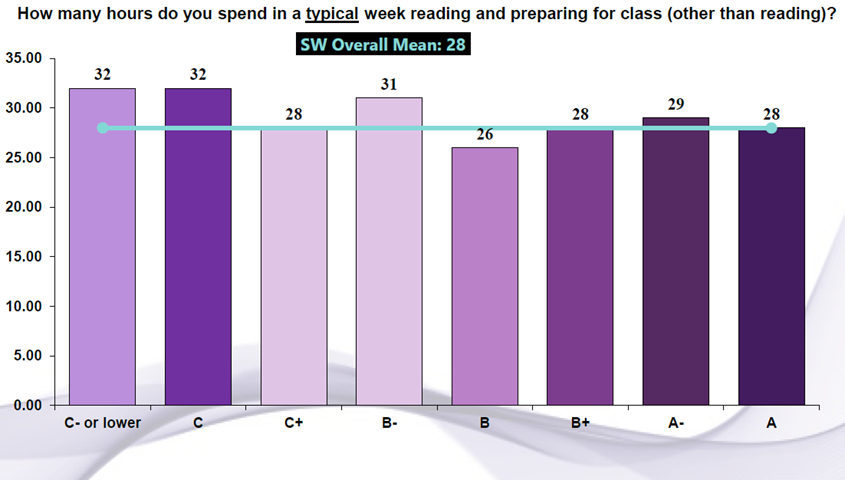

While we saw that GPA correlated fairly well with preparation, engagement, and self-perception of working hard, the correlation was not consistent: in each of these categories, our C+ students reported spending more time and working harder than the B students and in fact were very close to our A students. At C- and below, the correlation was strong.

What is happening with these C+ students that disrupts the correlation, and is there something we can do to help them see some of the same payoff from effort that our higher-performing students experience?

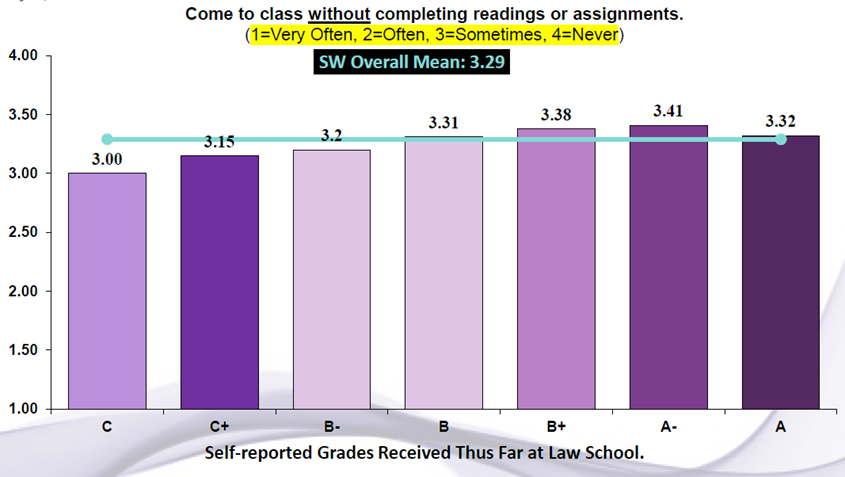

Another important anomaly that surfaced was that students with lower GPAs reported spending more time reading than did B-level students and above, but at the same time reported that they failed to complete the reading more often.

Does this possibly suggest that less assigned reading might enable better performance in this category of students? Whatever the answer, the evidence that our lower-performing students are working hard but struggling to keep up with the reading is important information to consider in how we structure our required curriculum and individual course assignments.

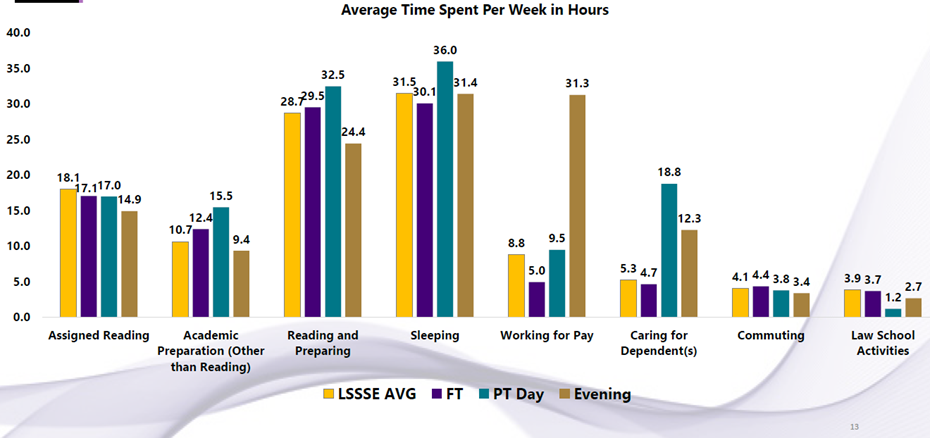

As one last example, we also compared the time that students reported spending on various school-related and personal tasks and learned that, on average, students spent more time commuting than engaging in extracurricular activities. This result came years after we built student housing on campus to address this very issue. Even our full-time students reported spending slightly more time on outside work than on extracurriculars.

This raises an important question about whether we could imagine interventions to decrease our students’ need to commute and find outside work. Next year, Southwestern will also be able to compare LSSSE responses and academic performance data from its in-residence program to data from its new fully online J.D. program (https://www.swlaw.edu/Online).

We are only on round one with this level of disaggregation, and it will take one or two more repetitions before the information is truly actionable, but the questions and thinking it has raised have already challenged some assumptions.
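For readers who want to try this kind of disaggregation on their own survey exports, the two moves described in the examples above (averaging one answer within each GPA band, and cross-referencing answers to two different questions) can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions, not the process our institutional research office used: the field names `gpa_band`, `prep_hours`, and `finished_reading` are hypothetical, and the records are made up for illustration rather than drawn from Southwestern or LSSSE data.

```python
from collections import defaultdict
from statistics import mean

# Made-up records for illustration only; these are NOT Southwestern or LSSSE data.
# The field names (gpa_band, prep_hours, finished_reading) are hypothetical.
responses = [
    {"gpa_band": "A", "prep_hours": 30, "finished_reading": "often"},
    {"gpa_band": "A", "prep_hours": 26, "finished_reading": "often"},
    {"gpa_band": "B", "prep_hours": 20, "finished_reading": "often"},
    {"gpa_band": "B", "prep_hours": 24, "finished_reading": "sometimes"},
    {"gpa_band": "C+", "prep_hours": 27, "finished_reading": "sometimes"},
    {"gpa_band": "C+", "prep_hours": 29, "finished_reading": "sometimes"},
]


def mean_by_group(rows, group_key, value_key):
    """Disaggregate: average value_key within each distinct value of group_key."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    return {group: mean(values) for group, values in groups.items()}


def cross_tabulate(rows, row_key, col_key):
    """Cross-reference two questions: count respondents for each pair of answers."""
    counts = defaultdict(lambda: defaultdict(int))
    for row in rows:
        counts[row[row_key]][row[col_key]] += 1
    # Convert nested defaultdicts to plain dicts for easier printing and comparison.
    return {r: dict(cols) for r, cols in counts.items()}


hours_by_band = mean_by_group(responses, "gpa_band", "prep_hours")
reading_by_band = cross_tabulate(responses, "gpa_band", "finished_reading")

for band, hours in hours_by_band.items():
    print(band, hours, reading_by_band[band])
```

When groups are small, a single response can move an average considerably, so it may be worth reporting the number of respondents alongside each mean.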

Another new way to use LSSSE that we have discovered is as part of our learning outcomes assessment process. Student answers regarding the extent to which they have been asked to synthesize and organize information and apply theories and concepts to new problems and situations serve as a useful indirect assessment tool for our learning outcomes on legal analysis. Although our initial experience with this was much like our prior experience with survey results – mostly confirming that we are doing well – we can next disaggregate these responses, as we have with the other examples mentioned above, to discover whether they correlate with GPA, class preparation, or engagement. We can thus examine whether student experience with these learning outcomes is consistent and which variables may have an impact.

These new approaches to LSSSE results are helping us use the survey as it was intended to be used – for discovery and improvement in our mission to maximize the success of our students.